How to Build a Data Center and Keep the Lights On

The data center building process is extremely complex, requiring careful planning with input from experts in wide-ranging fields.

Data Centers 2019

There are many factors that must be addressed when designing and building a data center. For starters, it’s all about power — finding it and managing it.

Finding the Power

Data centers require an incredible amount of electricity to operate, and delivering that electricity often requires the direct involvement of regional utilities. Energy infrastructure needs to be shifted, power lines need to be run, and redundancies need to be established. The most secure data centers have two separate feeds from utilities, so that if something happens to one of the lines, like an unexpected squirrel attack, the center doesn’t immediately lose all of its functionality.

Coordinating that takes a lot of effort, and often the clout of a large corporation, to get anywhere. But even the big players need to check the policies of utilities and local governments in any area where they plan to build a data center, to make sure that they will be able to establish those inputs. Without that redundancy, data centers can be vulnerable to power outages that could result in not only the loss of critical customer data, but also damage to the brand of the data center owner.
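To see why a second utility feed matters so much, here is a rough sketch of the arithmetic. It assumes the two feeds fail independently of each other, which may not hold if they share a substation or a right-of-way, and the 99.9 percent availability figure is purely hypothetical rather than a number from any utility.

```python
HOURS_PER_YEAR = 8760


def combined_availability(feed_availabilities):
    """Availability of a site that stays up while at least one feed is live.

    Assumes feed failures are statistically independent (a simplification).
    """
    p_all_down = 1.0
    for availability in feed_availabilities:
        p_all_down *= 1.0 - availability
    return 1.0 - p_all_down


def expected_downtime_hours(availability):
    """Expected hours per year without utility power."""
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical feed availability of 99.9 percent.
single_feed = combined_availability([0.999])
dual_feed = combined_availability([0.999, 0.999])

print(f"Single feed: {expected_downtime_hours(single_feed):.2f} hours down per year")
print(f"Dual feed:   {expected_downtime_hours(dual_feed) * 3600:.0f} seconds down per year")
```

Under these assumptions, two 99.9 percent feeds combine to roughly 99.9999 percent, which turns hours of expected utility downtime per year into seconds. Real-world gains are smaller whenever the feeds can fail together.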

The price and availability of that power are also incredibly important considerations because a data center is going to be a large draw at all times. With a significant amount of power going into computing, and even more going into cooling computers down, it’s no surprise that data centers consume more than 1.8 percent of all the electricity used in the United States. Again, companies planning data centers need to work with local governments and utilities for subsidies and deals that can make that energy easier to afford.
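A quick back-of-envelope calculation shows why the price per kilowatt-hour gets so much attention when a facility draws power around the clock. The load and the two rates below are hypothetical placeholders, not figures from any real site or utility.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(load_kw, price_per_kwh):
    """Yearly electricity bill for a constant load, ignoring demand charges."""
    return load_kw * HOURS_PER_YEAR * price_per_kwh


# Hypothetical 1 MW facility compared at two hypothetical rates (USD per kWh).
for rate in (0.05, 0.07):
    cost = annual_energy_cost(load_kw=1000, price_per_kwh=rate)
    print(f"At ${rate:.2f}/kWh: ${cost:,.0f} per year")
```

Even a two-cent difference in rate adds up to six figures a year at this scale, which is why subsidies and negotiated deals are worth pursuing.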

Keeping the Lights On

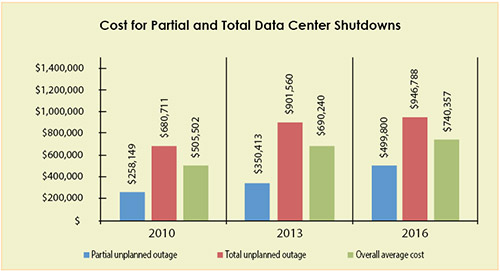

Much of the support infrastructure in data centers is focused on making sure that their power cannot be interrupted. According to research by the Ponemon Institute, the average cost of a data center outage in 2016 stood at $740,357, up 38 percent from when the report was first developed in 2010. That’s $8,851 per minute of lost revenue and unproductive employees (“e-mail is down, time to play Fortnite!”). A great deal of engineering attention has therefore been paid to keeping data centers operational in any kind of crisis.
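The two Ponemon figures quoted above can be combined into a simple estimator. The sketch below assumes cost scales linearly with outage length, which is a simplification, and the implied average duration it prints is back-of-envelope arithmetic on those two numbers, not a figure taken from the report.

```python
AVG_OUTAGE_COST_USD = 740_357  # 2016 average cost per outage, from the text
COST_PER_MINUTE_USD = 8_851    # per-minute cost, from the text


def implied_outage_minutes(total_cost=AVG_OUTAGE_COST_USD,
                           per_minute=COST_PER_MINUTE_USD):
    """Outage length implied by dividing the average cost by the per-minute cost."""
    return total_cost / per_minute


def outage_cost(minutes, per_minute=COST_PER_MINUTE_USD):
    """Estimated cost of an outage, assuming cost grows linearly with time."""
    return minutes * per_minute


print(f"Implied average outage: {implied_outage_minutes():.1f} minutes")
print(f"Estimated cost of a 10-minute outage: ${outage_cost(10):,}")
```

The division works out to an outage of a little under an hour and a half, which helps explain why shaving even a few minutes off recovery time is worth serious engineering effort.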

Uninterruptible power supplies (UPS) — powerful batteries that can start providing power almost instantaneously — are critical for this effort. They ensure that in an emergency, power comes back on in milliseconds, instead of seconds or minutes that could result in the loss of data or functionality for thousands of computer systems. But most UPS systems don’t serve as backup power for long. They simply don’t have the kind of power storage capacity that it takes to power a data center for more than a matter of minutes. In order to keep data centers fully running without utility power, data center operators usually turn to large diesel-powered generators, stocked with 24-48 hours of fuel at all times, in case they’re needed.
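The handoff described above, with batteries covering minutes and generators covering a day or more, comes down to two simple runtime calculations. Here is a minimal sketch. Every number in it (battery capacity, load, tank size, burn rate) is a hypothetical placeholder, and real sizing would also account for battery aging, inverter losses, and varying load.

```python
def ups_runtime_minutes(usable_battery_kwh, load_kw):
    """Minutes a UPS can carry a constant load on its usable stored energy."""
    return usable_battery_kwh / load_kw * 60


def generator_runtime_hours(fuel_litres, burn_litres_per_hour):
    """Hours a generator can run on the fuel stored on site."""
    return fuel_litres / burn_litres_per_hour


def meets_fuel_target(fuel_litres, burn_litres_per_hour, target_hours=24):
    """True if on-site fuel covers the target runtime (24 to 48 hours in the text)."""
    return generator_runtime_hours(fuel_litres, burn_litres_per_hour) >= target_hours


# Hypothetical site: 1,000 kW load, 250 kWh of usable battery,
# and a 10,000-litre tank feeding a generator that burns 280 litres per hour.
print(f"UPS bridge: {ups_runtime_minutes(250, 1000):.0f} minutes")
print(f"Generator:  {generator_runtime_hours(10_000, 280):.1f} hours")
print(f"Covers 24 hours? {meets_fuel_target(10_000, 280, 24)}")
print(f"Covers 48 hours? {meets_fuel_target(10_000, 280, 48)}")
```

In this made-up case the batteries only need to last long enough for the generators to start, and the fuel on hand clears the 24-hour mark but falls short of 48.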

All of this redundancy is required because of the incredible amount of energy that data centers use. But the other key factor in a data center’s success is the efficiency with which that energy is used. That starts with the organizational strategy used for cooling.

Staying Cool

Data centers are carefully planned structures. Every square foot needs to contribute to the wider goals of powerful and efficient computing. You can’t just slam server racks together, because their placement needs to fit in with the cooling system used to prevent overheating.

Data centers run hot, and today’s advances in High-Performance Computing (HPC) mean that they are using as much as five times more energy than they used to. This makes a cooling solution one of the most important decisions that a data center operator can make.

By far the most common data center cooling method involves airflow, using HVAC systems to control and lower the temperature as efficiently as possible. These systems typically use:

Aisle arrangement — This is an organizational strategy where hot and cold air are directed by the placement of server racks. Cold-air intakes are directed toward air-conditioning output ducts, and hot-air exhausts are directed toward air-conditioning intakes. This is used to isolate hot and cold environments in the data center and make controlling airflow and temperature easier. Containment systems, whether physical barriers or plenums under the racks, are also used to separate the hot and cold aisles, to prevent temperature mixing and energy inefficiencies.

Data centers have gone from being hardly noticed to one of the most important pieces of infrastructure in the global digital economy.

Rack placement — Placement of components and computing systems is also an important factor in cooling because proper placement can prevent hot air from being dispersed into problematic areas. When you are creating a controlled environment for temperature and airflow, you need heat to be dispersed into the right zone (usually one side of the server rack). It’s important, therefore, to prevent that hot air from being blasted out of the top of the server rack, even though that is often the hottest part. Placement of components on the server rack is a key way of combating this. Placing the heaviest, and hottest, components on the bottom of the rack makes it much more difficult for the heat to travel all the way to the top of the server rack to be dispersed. This, in combination with blanking panels, helps to prevent heat from moving into the wrong area and making the cooling solution less efficient.

Blanking panels — Another simple yet elegant solution that data center operators have been using for years, these plastic barriers are placed in empty slots in server racks, covering gaps where hot air could otherwise leak through.

High levels of monitoring — Monitoring is, of course, incredibly important with a system as complex as a data center. Small problems can cause thousands of dollars in energy inefficiencies because of the 24/7 nature of data center operation. This is why machine learning and AI are becoming tools that data center operators use to monitor and manage their data center environments every minute of every day. Google, for example, has already stated publicly that it is using AI to optimize data center efficiency and cool its data centers autonomously. The system makes all the cooling plant tweaks on its own, continuously, in real-time, delivering savings of up to 30 percent of the plant’s energy annually.
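The monitoring described in the list above does not have to start with machine learning. As a deliberately simple illustration, and not a description of Google's system, the sketch below flags racks whose recent inlet temperatures run hot. The rack IDs, the readings, the three-sample window, and the 27°C limit are all assumptions to be tuned for a real facility.

```python
from statistics import mean


def hot_racks(readings, limit_c=27.0, window=3):
    """Return rack IDs whose average over the last `window` readings exceeds the limit.

    `readings` maps a rack ID to a list of inlet temperatures in Celsius,
    oldest first. Racks with no readings are skipped.
    """
    flagged = []
    for rack_id, temps in readings.items():
        recent = temps[-window:]
        if recent and mean(recent) > limit_c:
            flagged.append(rack_id)
    return sorted(flagged)


# Hypothetical sensor data for three racks.
sample = {
    "A1": [22.0, 23.0, 24.0],
    "B4": [26.0, 28.0, 29.0],
    "C2": [],
}
print(hot_racks(sample))
```

Averaging over a short window rather than alerting on a single reading keeps one noisy sample from triggering an alarm, at the cost of reacting slightly later to a genuine spike.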

Rise of Liquid Cooling

While liquid cooling has historically been the domain of enterprise mainframes and academic supercomputers, it is being deployed more and more in data centers. More demanding workloads driven by mobile, social media, AI, and the IoT are leading to increased power demands, and data center managers are scrambling to find more efficient alternatives to air-based cooling systems.

The liquid cooling approach can be hundreds of times more efficient and use significantly less power than typical HVAC cooling systems. But the data center market is still waiting for some missing pieces of the puzzle, including industry standards for liquid-cooling solutions and an easy way for air-cooled data centers to make the transition without having to manage two cooling systems at once. Still, liquid cooling will likely become the norm in years to come, as the growing need for more efficient cooling shows no signs of slowing.

Other Critical Success Factors

Security — Security is critically important in any space that contains that much sensitive data, and this needs to be considered in a data center’s construction. Data centers often have multiple layers of security, requiring key cards and sometimes biometric information in order to gain access.

Cable management — Constructing a successful data center requires the establishment of a cable management system that will be easy to manage and upgrade going forward. This is less a safety issue and more of a key consideration in avoiding an organizational nightmare. Data center operators across the globe know the painful difficulty of trying to rein in cable management issues once they start getting out of control.

In Sum

Building a data center is about executing an extremely complex plan, with input from experts in wide-ranging fields. Firms thinking about building their own data center should consult with experts who have dealt with their specific difficulties before, to make sure that all of these core areas can be built without incident. Modern data centers are planned down to the last wire in Building Information Modeling (BIM) applications and similar software, so that the outcome is as guaranteed as possible before the first wall is erected. Data centers are key arteries of the digital economy, funneling the data of the modern economy between consumers, companies, governments, and citizens. That takes a lot of energy!
