In 2015, we wrote about the types of data center operators, the characteristics of each, and relevant site selection considerations. But our increased reliance on data and the interdependence between operator types have led to a hybrid model of operations today.

Because data center and information technology terminology and acronyms can be complex, we often use metaphors to help bridge the communication gap between project stakeholders. For data centers, we frequently use cars as a comparison. Most people know how to drive a car, but not everyone knows how the various parts work together, and even fewer know how to fix them without assistance.

In our 2015 article, we defined three primary types of data centers as we saw them:

- Enterprise data centers (EDCs): Traditional organizations (e.g., banking, healthcare, etc.)

- Internet data centers (IDCs): Large or hyperscale, Internet-centric (e.g., search engines)

- Third-party operators (3POs): Provide data center facilities and/or services (e.g., colocation, managed services providers (MSPs), etc.)

So, if the three (3) primary data center types are still the same as identified in our 2015 article, what’s changed? Below are developments that have impacted the data center industry segment:

The Great Consolidation

In the past, many information technology (IT) teams operated servers within their data centers with a limited number of applications per server. Average server utilization was low, but those servers were always on, always running, and consuming electricity. This is like leaving your car running in the garage overnight because you may use it tomorrow.

Virtualization is a technology that allows IT teams to operate multiple operating systems (virtual machines, or VMs) on a single physical (host) server. While the technology has been around for longer than the last five years, virtualization is now more widely used, which has decreased organizations’ overall IT footprint.

According to Spiceworks’ 2020 State of Virtualization Technology research, a survey of 530 IT decision-makers, server virtualization is used by 92 percent of businesses. Interop’s 2019 State of IT Infrastructure Report found that just 3 percent of those surveyed expected to have no servers virtualized in the next 12 months, while 42 percent expected to have over 50 percent of servers virtualized. Furthermore, in a 2019 Uptime Institute survey of 250 C-level executives, 51 percent cited virtualization as having the greater impact on demand, while only 32 percent cited cloud.

Thus, data centers that used to require thousands of square feet may now be consolidated into a small fraction of that area. One of our clients achieved a reduction of over 80 percent in their existing data center’s footprint through consolidation, which in turn reduced their capital and operating expenses.
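As a rough illustration of the consolidation arithmetic, the sketch below estimates how many host servers remain once lightly used physical servers are converted to VMs. The server count and the VMs-per-host ratio are hypothetical inputs chosen for the example, not figures from the surveys or from any client, and machine count is used only as a loose proxy for floor space.

```python
import math


def hosts_after_consolidation(physical_servers: int, vms_per_host: int) -> int:
    """Hosts needed if each legacy server becomes one VM on a shared host."""
    return math.ceil(physical_servers / vms_per_host)


def footprint_reduction(before: int, after: int) -> float:
    """Fractional reduction in machine count (a rough proxy for floor space)."""
    return 1 - after / before


# Hypothetical inputs for illustration only.
legacy_servers = 200
vms_per_host = 8

hosts = hosts_after_consolidation(legacy_servers, vms_per_host)
reduction = footprint_reduction(legacy_servers, hosts)
print(f"{legacy_servers} servers -> {hosts} hosts ({reduction:.1%} fewer machines)")
```

In practice, the achievable ratio depends on workload, memory, and redundancy requirements, so a real estimate would start from measured utilization rather than a single assumed ratio.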

Cloud: The Adoption of — Not “Move To” — Cloud Services

One of the most common mistakes is the reference to cloud services as “The Cloud.”

“Cloud” can be private or public, with the latter being a reference to the “_ as-a-Service (_aaS)” operating model. Whether public cloud is delivered via Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), Software-as-a-Service (SaaS), or another variation, cloud services are a multi-provider (not singular) approach.

For instance, any given enterprise may use a combination of its own data center, supplemented by an IaaS, like Amazon Web Services; a PaaS, like Microsoft Azure; and a multitude of SaaS offerings, such as Salesforce or similar providers.

According to the Uptime Institute’s 2019 Annual Data Center Survey results:

Most operators surveyed have a hybrid strategy. IT workloads are being spread across a range of services and data centers, with about a third of all workloads expected to be contracted to cloud, colocation, hosting, and Software-as-a-Service (SaaS) suppliers by 2021.

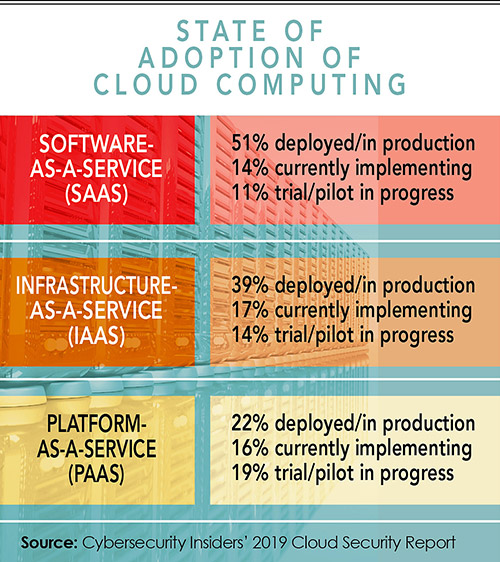

The accompanying chart shows the results of a question on organizations’ state of cloud computing adoption from the 2019 Cloud Security Report by Cybersecurity Insiders (a 400,000-member information security community). For each of Software-as-a-Service (SaaS), Infrastructure-as-a-Service (IaaS), and Platform-as-a-Service (PaaS) cloud solutions, the majority of survey respondents were deployed/in production, currently implementing, or running a trial/pilot.

This tells us that the adoption of cloud services is not only multi-cloud in nature, but also mainstream in practice, suggesting that the hybrid model of operations will continue to evolve. To use another car analogy, groups of workers (VMs) can now carpool in the same van (host server) while also interacting with others both in and outside the van (cloud).

Edge Computing

In Network Computing magazine’s article “Network Trends You Can Expect in 2020” (12/31/19), “edge computing” was defined as “the concept of taking compute and data much closer to the end-user when compared to traditional cloud computing.”

Considering the hybrid model of IT operations most organizations now deploy (i.e., the combination of enterprise and third-party operated infrastructure), the interdependence of these facilities requires coordination and telecom bandwidth.

Replication of information between two data centers can be synchronous (simultaneous) or asynchronous (not simultaneous). While technology (fiber, routers, repeaters, etc.) may improve, the speed of light is constant, and the general thought today is that synchronous replication is practical only within roughly 100 kilometers of fiber length. Beyond that distance, the lag time, referred to as latency, becomes too high for synchronous operation.
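To make the speed-of-light constraint concrete, here is a minimal sketch that estimates propagation delay over a given fiber length. It assumes a refractive index of about 1.47 for silica fiber, a typical textbook value rather than a figure from this article, and it counts propagation only. Delay added by routers, repeaters, and storage systems is ignored, and a real fiber route is usually longer than the straight-line distance.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumed typical value for silica fiber


def one_way_delay_ms(fiber_km: float) -> float:
    """Propagation delay in milliseconds over fiber_km of fiber, one direction."""
    speed_in_fiber_km_per_s = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX
    return fiber_km / speed_in_fiber_km_per_s * 1000


def round_trip_delay_ms(fiber_km: float) -> float:
    """Propagation delay for a request and its acknowledgment."""
    return 2 * one_way_delay_ms(fiber_km)


for km in (10, 100, 1000):
    print(f"{km:>5} km of fiber: ~{round_trip_delay_ms(km):.2f} ms round trip")
```

Under these assumptions, 100 km of fiber costs roughly one millisecond per round trip before any equipment delay is added, and every synchronous write has to wait for that round trip.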

As an example, the round-trip (rt) latency between Los Angeles and Phoenix on AT&T’s network is currently ~10 milliseconds (ms) while the latency between Los Angeles and Denver is ~25 ms. If the maximum allowable latency between an EDC’s data centers is 25 ms, an EDC in Los Angeles may not consider locations past Denver.
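The same screening can be sketched as a simple filter over candidate metros. The Phoenix and Denver values are the approximate round-trip figures quoted above; the third entry is a made-up placeholder added only to show a candidate being rejected, and the function name is hypothetical.

```python
# Approximate round-trip latencies from Los Angeles, in milliseconds.
# Phoenix and Denver use the figures quoted in the text; the last entry
# is a hypothetical placeholder, not a measured value.
RT_LATENCY_FROM_LA_MS = {
    "Phoenix": 10.0,
    "Denver": 25.0,
    "Hypothetical farther metro": 40.0,
}


def metros_within_budget(rt_latency_ms: dict[str, float], max_rt_ms: float) -> list[str]:
    """Return metros at or under the latency budget, nearest first."""
    eligible = [(ms, metro) for metro, ms in rt_latency_ms.items() if ms <= max_rt_ms]
    return [metro for ms, metro in sorted(eligible)]


print(metros_within_budget(RT_LATENCY_FROM_LA_MS, max_rt_ms=25.0))
```

With a 25 ms budget, Phoenix and Denver stay on the list and the placeholder metro drops out. Tightening the budget to 10 ms would leave only Phoenix.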

Likewise, a Software-as-a-Service (SaaS) provider, hosting services for any given customer, will need to host those services as close to the end-user as necessary. This is, in part, “edge computing.”

Where costs to operate in one metro may be prohibitive, those services may be provided from another metro so long as it’s within an acceptable latency window. This explains why we see large-scale data center activity in markets like Phoenix and Las Vegas, where services are provided to organizations in Southern California metros.

Colocation’s Evolution to Hybrid Colocation

As identified in our 2015 article, colocation is a subset of the third-party operators (3POs) model, where providers build and operate data centers specifically with the intent to lease or license to third parties.

Acquiring colocation capacity is like a tenant leasing a suite in a multi-tenant building rather than building their own. Customers lease or license a specific number of cabinets or an amount of cage space, along with a certain amount of power capacity (e.g., 5 kW per rack or cabinet), to house and operate their own IT hardware.

In the last few years, with the rapid adoption of various cloud services, many colocation facilities have evolved to a hybrid colocation model.

The facilities themselves have become an ecosystem where occupiers can cross-connect to cloud services within the walls of the colocation data center and mitigate outside telecom spend and latency. Whether the connection is to other cloud customers operating within the facility, to services provided by the colocation provider itself, or to an on-ramp/portal provider, access to cloud services is becoming table stakes.

In our annual Cresa MCS Colocation Survey (year-end 2019), all seven colocation providers surveyed replied that cloud and/or other hosted services were available to customers within their data centers. This further supports the view that hybrid colocation is growing in importance.

Back to the Fundamentals

In the end, most customers of colocation and cloud services are enterprise organizations, yet not all data center locations are the same.

Site selection for enterprise data centers within regional markets is a sequential process of elimination. So, consider for each potential data center location the following:

Do the properties have access to the appropriate:

- Power capacity and redundancy?

- Telecom capacity and redundancy?

- Water capacity and redundancy?

- Flight path

- Flood plain

- Accessibility and proximity (customers, talent, vendors, etc.)
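The sequential process of elimination can be sketched as a chain of pass/fail filters applied in order. Everything in this example is hypothetical: the `Site` fields, the site names, and the assumption that a flight path or flood plain location disqualifies a property. Accessibility and proximity are left out because they are usually weighed rather than treated as pass/fail.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Site:
    name: str
    redundant_power: bool
    redundant_telecom: bool
    redundant_water: bool
    under_flight_path: bool
    in_flood_plain: bool


# Each criterion is a label plus a test a site must pass to stay on the list.
# The order and the pass/fail rules are assumptions made for illustration.
CRITERIA: list[tuple[str, Callable[[Site], bool]]] = [
    ("power capacity and redundancy", lambda s: s.redundant_power),
    ("telecom capacity and redundancy", lambda s: s.redundant_telecom),
    ("water capacity and redundancy", lambda s: s.redundant_water),
    ("outside flight path", lambda s: not s.under_flight_path),
    ("outside flood plain", lambda s: not s.in_flood_plain),
]


def shortlist(sites: list[Site], criteria: list[tuple[str, Callable[[Site], bool]]]) -> list[Site]:
    """Apply each criterion in turn, dropping sites that fail it."""
    remaining = list(sites)
    for label, passes in criteria:
        remaining = [s for s in remaining if passes(s)]
        print(f"after '{label}': {[s.name for s in remaining]}")
    return remaining


# Hypothetical candidate properties.
SITES = [
    Site("Site A", True, True, True, under_flight_path=False, in_flood_plain=True),
    Site("Site B", True, True, True, under_flight_path=False, in_flood_plain=False),
    Site("Site C", True, False, True, under_flight_path=False, in_flood_plain=False),
]

finalists = shortlist(SITES, CRITERIA)
```

Ordering the criteria so that the cheapest checks to verify come first keeps the more expensive due diligence limited to the sites that survive the early rounds.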

Further details on site selection considerations for enterprise (EDC), Internet (IDC), and third-party operator (3PO) data centers can be found in “The Changing Landscape of Data Centers.”

As the IT industry continues to evolve, so too will the data centers and infrastructure that support it. Data centers are like cars in that they depreciate with age, efficiency, and utilization. Going forward, data center facilities will need to be designed to accommodate scalable growth (space and capacity), flexibility, and proximity, with hybrid models top of mind.