Technology capabilities that used to be reserved for a select few, such as government agencies, university researchers, and a limited number of corporations, have now become mainstream. This growth tracks not only Moore's law, the roughly two-year doubling of transistor counts that has driven compute power, but also a comparable trend in data storage. Inexpensive storage devices, from storage area networks (SANs) to consumer flash drives, allow information to be saved at very low cost. The cloud, in turn, lets us access that information from virtually anywhere with a network connection. Demand for storage is driven by how easily we now communicate through media such as online images, electronic patient records, and email.

While the affordability of computing and data storage has let us use technology in ways that cost would previously have prohibited, an estimated seven billion users place tremendous stress on the data center market. More computing power and more IT equipment mean more power and cooling are needed to keep the systems running.

At the same time, that growth is only possible if the right infrastructure is in place. The mechanical and electrical systems that support data center computers still follow traditional standards, developed more than 100 years ago, that are very slow to change. As a result, some infrastructure now drives up the total cost of ownership (TCO), costing as much as or even more than the equipment housed within these facilities. To create data center facilities that keep pace with technology, infrastructure systems must be adapted to today's requirements.

Impact on Data Center Lifecycle

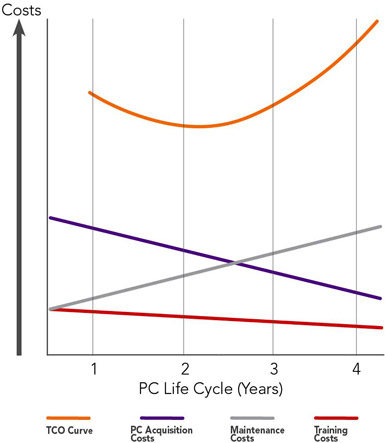

Infrastructure is not the only factor in a data center's TCO. The TCO accounts for initial infrastructure capital, operating costs, and IT compute costs (such as hardware refresh cycles and software).

Because IT equipment has become cheaper, infrastructure capital and operating costs are starting to approach and even exceed IT costs. Cheaper compute and advances in software and network technology have made possible failover redundancies that were not feasible in the past, which changes the resiliency requirements placed on data center infrastructure. IT failover redundancy challenges the traditional model of data center resiliency, in which infrastructure redundancy was needed to support the mission. This shift from infrastructure redundancy to application redundancy is prompting a re-evaluation of TCO.

Redundancy requirements drive wide variation in cost: a highly resilient infrastructure system can cost three times as much to build as a lower-redundancy system, and roughly twice as much to operate. Most infrastructure is designed for traditional brick-and-mortar buildings with a life of 40-plus years, and the associated mechanical and electrical equipment lasts 20 to 40 years. IT equipment, by contrast, is refreshed every 18 to 36 months. This mismatch between the pace of IT change and the life of infrastructure equipment has created economic pressure to redefine resiliency for IT compute.
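To make the comparison concrete, here is a minimal, undiscounted TCO sketch that combines the cost categories listed earlier (infrastructure capital, operating costs, and IT compute refreshed every 18 to 36 months) with the roughly 3x capital and 2x operating multipliers for highly resilient infrastructure. All dollar amounts are hypothetical placeholders, the `Site` structure and function names are illustrative, and the sketch assumes IT equipment is duplicated at both sites when redundancy moves into software. A real TCO model would also discount future cash flows.

```python
import math
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical cost inputs for one facility, in $ millions."""
    capital: float           # initial infrastructure capital
    annual_operating: float  # infrastructure operating cost per year
    it_per_refresh: float    # IT hardware/software spend per refresh cycle


def it_purchases(life_years: float, refresh_months: float) -> int:
    """IT purchases over the facility life, counting the initial buy at year 0."""
    return math.ceil(life_years * 12 / refresh_months)


def tco(site: Site, life_years: float, refresh_months: float) -> float:
    """Undiscounted TCO: capital + operating over the life + every IT purchase."""
    return (site.capital
            + site.annual_operating * life_years
            + site.it_per_refresh * it_purchases(life_years, refresh_months))


# Placeholder baseline: a lower-redundancy site.
low = Site(capital=10.0, annual_operating=1.0, it_per_refresh=1.0)
# Highly resilient site: ~3x the capital and ~2x the operating cost (per the text).
high = Site(capital=3 * low.capital, annual_operating=2 * low.annual_operating,
            it_per_refresh=low.it_per_refresh)

LIFE_YEARS, REFRESH_MONTHS = 20, 36
one_high = tco(high, LIFE_YEARS, REFRESH_MONTHS)
two_low = 2 * tco(low, LIFE_YEARS, REFRESH_MONTHS)  # IT duplicated at both sites

print(f"IT purchases over {LIFE_YEARS} years: {it_purchases(LIFE_YEARS, REFRESH_MONTHS)}")
print(f"One high-resiliency site:   ${one_high:.0f}M")
print(f"Two lower-redundancy sites: ${two_low:.0f}M")
```

With these placeholder inputs the two lower-redundancy sites come out slightly cheaper ($74M vs. $77M), but raising `it_per_refresh` to 3.0 reverses the result ($102M vs. $91M). The answer depends heavily on how cheap compute is relative to infrastructure, which is why such a comparison should be validated with real figures.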

Responding to the Market

In the past, data centers were designed for power densities below 30 watts per square foot and required multiple levels of redundancy in the facility's electrical and mechanical systems. The objective was to ensure that environmental conditions had no effect on the IT equipment, so that it remained operational regardless of conditions beyond the pristine white raised floor on which it was installed. IT equipment was also physically larger and had less compute capability, so power consumption relative to footprint was low, and the required power and cooling were easily delivered with available infrastructure equipment.

The data center market has now reached a stage where power densities can be as high as 800 watts per square foot of compute. A 1,200-square-foot data center can require as much power as up to 500 houses. Most of a data center's construction cost lies in its mechanical and electrical infrastructure and the redundancy required for that infrastructure. This increase in power density does not come from less efficient hardware; in fact, IT equipment consumes less energy per unit of compute than it did 20 years ago. The main driver is the sheer volume of compute. In response to these market drivers, the following changes in resiliency are occurring:
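As a back-of-the-envelope check, the density figures above can be multiplied out for the 1,200-square-foot example. The sketch uses only the 30 and 800 watts-per-square-foot densities and the 500-house comparison from the text; the helper name `it_load_kw` is illustrative, and the per-house value is simply the implied average, not a figure stated in the source.

```python
def it_load_kw(watts_per_sq_ft: float, area_sq_ft: float) -> float:
    """Total IT load in kilowatts for a given power density and floor area."""
    return watts_per_sq_ft * area_sq_ft / 1000


AREA_SQ_FT = 1_200
HOUSES = 500
legacy_kw = it_load_kw(30, AREA_SQ_FT)   # older design point from the text
modern_kw = it_load_kw(800, AREA_SQ_FT)  # high-density design point from the text

print(f"Legacy load: {legacy_kw:.0f} kW")
print(f"Modern load: {modern_kw:.0f} kW")
print(f"Density increase: {800 / 30:.1f}x")
print(f"Implied average load per house: {modern_kw / HOUSES:.2f} kW")
```

The same room that once drew about 36 kW can now draw about 960 kW, a roughly 27-fold increase, which is why power and cooling infrastructure dominates construction cost.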

- Reliance on software redundancy: Because compute is relatively cheap, it can be cheaper to build and operate two or more facilities with lower redundancy than one highly redundant facility. This should be validated with a TCO evaluation over the life expectancy of both the IT equipment and the infrastructure.

- Procurement of next-generation IT compute to reduce power consumption at existing facilities: This extends the life of the data center. Next-generation compute is more efficient, and with a consolidated compute structure, the existing building and infrastructure may continue to support the mission.

- Use of standardized, modular infrastructure equipment: Customization costs significantly more than buying the "Model T." Because redundancy can be provided across multiple sites instead of within a single site, a standard modular unit can be deployed at another data center location if IT growth at one site falls short of projections while another site grows faster.

- Use of a standardized solution: This provides a scalable operational framework and makes maintenance easier to perform and optimize.

- Marrying and optimizing IT with infrastructure: This has become a major push in data center design and in the market. Beyond the mechanical and electrical cost of redundancy, installing engineered systems that are "right sized" to the power and cooling the IT equipment actually consumes can significantly reduce stranded capacity (capacity built in anticipation of future IT compute that may never be used), directly lowering capital and operating costs. Doing this effectively requires monitoring the IT compute load and making provisions for rapid deployment of additional infrastructure as that load grows.

- Reducing the power needed to cool IT compute: This is leading to some very innovative solutions, such as raising the threshold temperatures the computers can withstand. Widening the operating parameters of the servers has enabled the development of innovative cooling systems. The ratio of total facility power to IT compute power, which used to be more than 2.0, can now be 40 percent lower. These efficiencies come from mechanical and electrical measures such as direct air cooling, matching supply voltage to the voltage the equipment uses, and monitoring and controls that align infrastructure operation with IT compute needs.
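The facility-to-IT power ratio in the last item is commonly reported as power usage effectiveness (PUE): total facility power divided by IT equipment power. The sketch below treats the text's "more than two times" as a ratio of 2.0 and applies the 40 percent reduction; the 1,000 kW IT load and the function names are hypothetical. It shows a less obvious consequence: a 40 percent lower ratio means the non-IT overhead (mostly cooling) falls by 80 percent.

```python
def facility_power_kw(it_kw: float, ratio: float) -> float:
    """Total facility power given IT load and the facility-to-IT power ratio (PUE)."""
    return it_kw * ratio


def overhead_kw(it_kw: float, ratio: float) -> float:
    """Power spent on cooling and other non-IT infrastructure."""
    return facility_power_kw(it_kw, ratio) - it_kw


IT_KW = 1_000.0                      # hypothetical IT load
OLD_RATIO = 2.0                      # "more than two times", taken as 2.0
NEW_RATIO = OLD_RATIO * (1 - 0.40)   # 40 percent lower, i.e. about 1.2

old_total = facility_power_kw(IT_KW, OLD_RATIO)
new_total = facility_power_kw(IT_KW, NEW_RATIO)
old_over = overhead_kw(IT_KW, OLD_RATIO)
new_over = overhead_kw(IT_KW, NEW_RATIO)

print(f"New ratio: {NEW_RATIO:.2f}")
print(f"Total facility power: {old_total:.0f} kW -> {new_total:.0f} kW")
print(f"Non-IT overhead: {old_over:.0f} kW -> {new_over:.0f} kW "
      f"({1 - new_over / old_over:.0%} less)")
```

Because the IT load itself is unchanged, every point shaved off the ratio comes entirely out of cooling and power-distribution overhead.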