How do I determine the right location?

It depends! Unfortunately, there is no one-size-fits-all answer when it comes to data center site selection. We can't help but laugh when, on seemingly every project, some far-removed executive or senior leader raises the question, “Well, Facebook (Google, Microsoft, Amazon, etc.) just announced a data center in Sweden (Iceland, the North Pole, a container on a ship, or other remote location); how come we are not doing that?” Well, for starters, you are not Facebook, Google, Microsoft, or Amazon. You do not have 30 data centers worldwide that can fail over to each other. Like most enterprises, you likely have between two and five data centers that need to be located within some proximity of each other and of your main user base in order to maintain operations. To determine the optimum location, leading enterprises start by identifying any limitations on site location, then evaluate that search area to find the optimum location for their risk profile at the lowest net cost attainable.

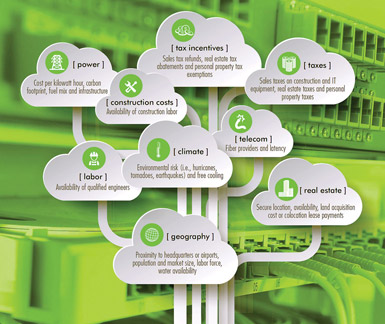

Once the search area is established, enterprises should engage in a systematic process of eliminating any locations that violate absolute requirements while evaluating and weighing discretionary and financial factors. An absolute criterion might be a distance threshold from an existing location, set either for operational benefits or to avoid having two facilities exposed to the same hazard. Other factors may include the perceived risk of downtime at a given site.
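To make the two-stage process concrete, here is a minimal Python sketch that first drops any site failing an absolute criterion and then ranks the survivors by a weighted score of discretionary factors. Everything in it is a hypothetical illustration: the `Site` fields, the 30-to-300-mile distance band, the shared-hazard flag, the factor names, and the weights are assumptions, not values from any real evaluation. Financial factors are left out here; they would typically feed a separate net-cost comparison.

```python
from dataclasses import dataclass


@dataclass
class Site:
    """A candidate location. All fields are hypothetical illustration inputs."""
    name: str
    miles_from_existing_dc: float  # distance to the enterprise's existing facility
    shares_hazard_zone: bool       # True if exposed to the same hazard as the existing site
    scores: dict[str, float]       # discretionary factor scores, 0 (poor) to 10 (excellent)


# Hypothetical absolute criteria: a site outside this band is removed outright.
MIN_MILES = 30.0   # assumed minimum, to avoid a shared regional hazard
MAX_MILES = 300.0  # assumed maximum, to keep operational proximity

# Hypothetical weights for discretionary factors (they sum to 1.0).
WEIGHTS = {"labor_pool": 0.4, "accessibility": 0.3, "network": 0.3}


def passes_absolute_criteria(site: Site) -> bool:
    """Return True only if the site violates none of the "no go" rules."""
    within_band = MIN_MILES <= site.miles_from_existing_dc <= MAX_MILES
    return within_band and not site.shares_hazard_zone


def weighted_score(site: Site) -> float:
    """Combine discretionary factor scores using the weights above."""
    return sum(weight * site.scores[factor] for factor, weight in WEIGHTS.items())


def rank_sites(sites: list[Site]) -> list[tuple[str, float]]:
    """Drop sites that fail absolute criteria, then rank survivors by score."""
    survivors = [s for s in sites if passes_absolute_criteria(s)]
    ranked = sorted(survivors, key=weighted_score, reverse=True)
    return [(s.name, round(weighted_score(s), 2)) for s in ranked]


if __name__ == "__main__":
    candidates = [
        Site("Site A", 45, False, {"labor_pool": 8, "accessibility": 7, "network": 9}),
        Site("Site B", 10, False, {"labor_pool": 9, "accessibility": 9, "network": 9}),
        Site("Site C", 120, True, {"labor_pool": 7, "accessibility": 8, "network": 8}),
        Site("Site D", 200, False, {"labor_pool": 6, "accessibility": 8, "network": 7}),
    ]
    print(rank_sites(candidates))
```

With these made-up inputs, Site B is eliminated for sitting too close to the existing facility and Site C for sharing its hazard exposure, even though both score well; the remaining sites are ordered by weighted score. Keeping the pass/fail screen separate from the scoring prevents a "no go" site from being rescued by strong discretionary scores.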

An enterprise’s risk tolerance can vary dramatically and is typically driven by its industry and operations. Banks, aerospace and defense contractors, and government users tend to be the most risk-averse, meaning the cost of downtime far exceeds any financial benefit a location might offer. For example, one large financial institution determined that every minute of downtime cost it $7 million in lost revenue. For other clients, the risk of a location is weighed against its financial or operational benefits. When given a choice, all else being equal (e.g., good accessibility, a strong labor pool, and other industry- and client-specific factors), clients should avoid areas subject to wide-area disruption (earthquakes, hurricanes, tornadoes, flood zones, etc.).
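The arithmetic behind risk tolerance can be sketched as an expected annual downtime cost (expected outage minutes per year multiplied by the cost per minute) compared against what a riskier site would save. The sketch below uses the $7 million-per-minute figure from the example above as a default; the outage minutes and savings figures are hypothetical, and the model deliberately ignores reputational, regulatory, and recovery costs.

```python
# Default taken from the financial-institution example in the text.
DOWNTIME_COST_PER_MINUTE = 7_000_000


def annual_downtime_cost(expected_minutes_per_year: float,
                         cost_per_minute: float = DOWNTIME_COST_PER_MINUTE) -> float:
    """Expected yearly loss from outages: minutes of downtime times cost per minute."""
    return expected_minutes_per_year * cost_per_minute


def riskier_site_is_worth_it(annual_savings: float,
                             extra_downtime_minutes_per_year: float,
                             cost_per_minute: float = DOWNTIME_COST_PER_MINUTE) -> bool:
    """True if a cheaper but riskier site saves more than its added expected outage loss.

    Both inputs are hypothetical estimates the enterprise would supply.
    """
    added_risk = annual_downtime_cost(extra_downtime_minutes_per_year, cost_per_minute)
    return annual_savings > added_risk
```

For instance, a site that saves $5 million a year but adds an expected two minutes of outage per year carries $14 million of added expected loss at $7 million per minute, so it loses; at a hypothetical $10,000 per minute the same site comes out ahead. That gap is why the same location can be right for one industry and wrong for another.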

After eliminating locations that fail any absolute “no go” criteria, many enterprises find that cost is the deciding factor in choosing the final location. While the cost of electricity is widely known to be a driver, you’d be surprised how many enterprises and executives fail to evaluate the impact of taxes until advised to do so. Statutory and discretionary tax incentives for data centers can create a variance of millions of dollars from one location to another. Other operational differences, such as free cooling using outside air, network costs, and labor costs, can also affect the bottom line. Ultimately, the net total cost of each location under consideration, accounting for all IT and real estate expenses, is evaluated to support an informed decision.
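A minimal sketch of a net total cost comparison follows. It assumes a simple undiscounted sum over the analysis period, models tax incentives only as a fractional sales tax exemption on IT equipment, and represents free cooling implicitly as lower annual energy use; all field names and dollar figures are hypothetical. A real analysis would typically discount future cash flows and include many more line items.

```python
from dataclasses import dataclass


@dataclass
class LocationCosts:
    """Hypothetical cost inputs for one candidate location (USD unless noted)."""
    name: str
    energy_mwh_per_year: float    # lower where free cooling with outside air is available
    power_price_per_mwh: float    # local utility rate
    equipment_spend: float        # one-time IT equipment purchase
    sales_tax_rate: float         # statutory rate on equipment, e.g. 0.07 for 7%
    incentive_exemption: float    # share of that sales tax waived by incentives, 0.0 to 1.0
    network_per_year: float
    labor_per_year: float
    real_estate_per_year: float


def net_total_cost(loc: LocationCosts, years: int) -> float:
    """Undiscounted recurring costs over the period plus net sales tax on equipment."""
    annual = (
        loc.energy_mwh_per_year * loc.power_price_per_mwh
        + loc.network_per_year
        + loc.labor_per_year
        + loc.real_estate_per_year
    )
    net_sales_tax = loc.equipment_spend * loc.sales_tax_rate * (1 - loc.incentive_exemption)
    return annual * years + net_sales_tax


def cheapest(locations: list[LocationCosts], years: int) -> LocationCosts:
    """Return the location with the lowest net total cost over the period."""
    return min(locations, key=lambda loc: net_total_cost(loc, years))


if __name__ == "__main__":
    # Made-up figures: Site Y pays more for power but receives a full tax exemption.
    site_x = LocationCosts("Site X", 40_000, 60, 50_000_000, 0.07, 0.0,
                           500_000, 1_000_000, 2_000_000)
    site_y = LocationCosts("Site Y", 38_000, 70, 50_000_000, 0.06, 1.0,
                           600_000, 1_100_000, 1_800_000)
    for site in (site_x, site_y):
        print(f"{site.name}: ${net_total_cost(site, years=10):,.0f}")
    print("Lowest net cost:", cheapest([site_x, site_y], years=10).name)
```

With these inputs, Site Y has the lower 10-year net cost despite its higher power rate, because the incentive removes the sales tax on $50 million of equipment; without that exemption, Site X would win. That is the kind of swing the tax discussion above describes.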

With technology evolving so quickly, how do I know I am picking the right solution today that can accommodate the needs of tomorrow?

Data center technologies are constantly evolving, as are the physical requirements to support them. Most of the equipment used in data centers today did not exist 20 years ago. How can a company guarantee that the best solution today will accommodate technologies that have not yet been invented or adopted? The short answer is that it can’t, so companies must acquire and develop flexible solutions expressly designed to accommodate future change. Leading-edge companies are limiting exposure to future technological obsolescence by outsourcing to third parties, reserving shell capacity, and increasing the density potential of the solutions selected.

The move to outsourcing is the single largest trend among enterprise users. Whether that means a build-to-suit leaseback, wholesale colocation, or a fully outsourced cloud solution, companies are leasing third-party solutions to avoid carrying on their own books highly capital-intensive assets that risk becoming technologically obsolete. Financial benefits aside, leasing or outsourcing allows companies to secure short-term solutions based on what they know to be true today while retaining the ability to adjust as their own requirements and technology evolve.

Regardless of whether a company outsources or decides to own and develop its own facility, smart enterprises are reserving shell space for future growth. For a large deployment, this might entail dedicated adjacent land for future development, or a shell building that allows quick, adjacent expansion. For a colocation user, it likely entails a right of first offer or refusal on adjacent critical areas. The overall cost of land, a shell building, or an expansion right is typically nominal relative to the potential cost of migrating to a new location.

One trend that has continued for some time is the steady increase in power density in data center facilities. Just a few years ago, most facilities were built to accommodate up to 150 watts per critical square foot. Today, with increased adoption of virtualization and high-performance compute environments, many facilities are being developed or acquired to accommodate 300 watts or more per critical square foot. Whether or not an enterprise uses these densities today, it wants the ability to do so in the future.
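The density figures above translate directly into how much IT load a given floor area can carry. The helpers below are simple unit conversions; the function names and the 20,000-square-foot hall are hypothetical examples.

```python
def critical_load_mw(critical_sq_ft: float, watts_per_sq_ft: float) -> float:
    """IT load (MW) a critical area can support at a given design density."""
    return critical_sq_ft * watts_per_sq_ft / 1_000_000


def floor_area_needed(load_mw: float, watts_per_sq_ft: float) -> float:
    """Critical square feet required to house a given IT load at a design density."""
    return load_mw * 1_000_000 / watts_per_sq_ft


if __name__ == "__main__":
    hall_sq_ft = 20_000  # hypothetical critical area
    for density in (150, 300):
        print(f"{density} W/sq ft: {critical_load_mw(hall_sq_ft, density):.1f} MW")
```

At 150 watts per square foot the hypothetical hall supports 3.0 MW; at 300 watts it supports 6.0 MW. Put the other way, a 3 MW requirement needs 20,000 critical square feet at the older density but only 10,000 at the newer one.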

How do I cover all bases and ensure a successful process?

The most successful projects are the ones that allow ample time for a team of seasoned experts, both internal and external to the enterprise, to thoroughly evaluate all market alternatives and make informed decisions. Determining the optimum site location; engaging in a competitive negotiation process; and developing, commissioning, and migrating to a new data center can easily take 36 months. One of the biggest mistakes enterprises make today is not beginning the process early enough, which leaves only a fraction of the opportunities or locations available for evaluation. This costly error can add tens of millions of dollars over an analysis period.

While data center trends and market conditions continue to evolve, one thing remains constant in the life of the corporate executive: the importance of an auditable process. Given the large amounts of capital behind a data center acquisition, it’s not uncommon for these decisions to require board-level approval. A team’s ability to navigate, record, and display the data center acquisition process from start to finish is paramount to transaction success.

A typical enterprise might engage in a data center evaluation only once every few years. Because technology, solutions, and market dynamics change constantly, data from an enterprise’s last transaction is likely already obsolete. Coupling internal IT professionals who understand the “hows” and “whys” of the underlying requirements with seasoned third-party experts in data center incentives, site selection, and negotiation drives optimum results.

In Sum

As market dynamics continue to change, data center solutions will adjust to match the expectations of users in the market, expectations that corporate real estate executives must understand well. Smart companies engage in strategic planning months, if not years, in advance and reevaluate existing solutions often to stay ahead of the curve and operate as efficiently as possible.