Artificial intelligence has quietly taken its place among construction crews. It doesn’t wear a hard hat or check in at the site trailer, but it’s working — designing layouts, forecasting delays, operating equipment, flagging safety hazards, and even managing buildings long after turnover.

AI’s footprint on jobsites is growing fast. So are its risks, and the law seems to be playing catch-up.

Construction contracts, by and large, don’t address the use of AI, and that silence is becoming dangerous. Why? Because when algorithms start making decisions, especially the kind that affect budgets, timelines, safety, and design integrity, the question is no longer whether they’re helpful, but who gets sued when they’re wrong.

Because such disputes appear inevitable, any AI that is part of the project team needs to be accounted for in writing.

The Expanding Role of AI in Construction Workflows

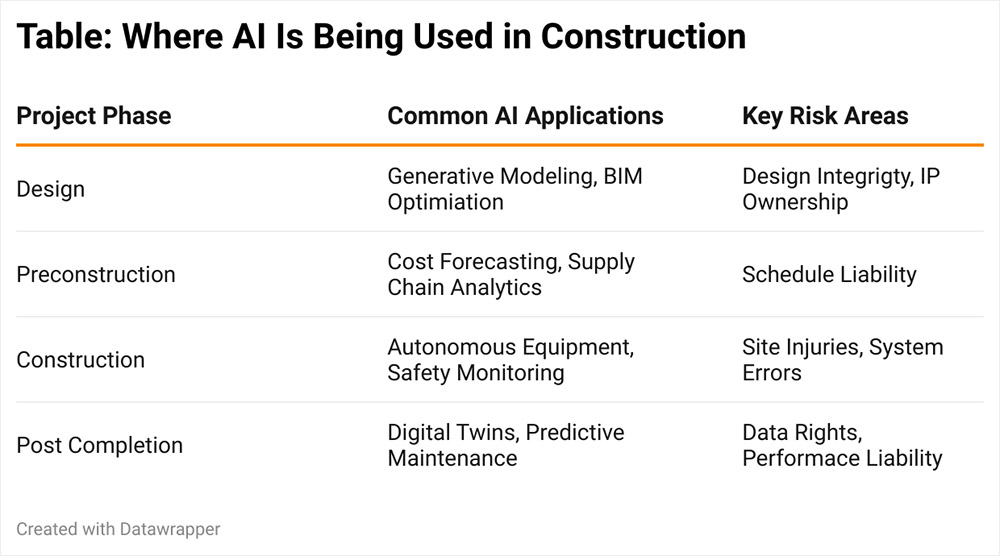

AI has moved well beyond the hypothetical in construction. Across every phase of a project, the technology is increasingly embedded in core processes.

In the design phase, AI-enhanced tools suggest optimal structural layouts and material combinations, often integrated within Building Information Modeling (BIM) platforms. During preconstruction, algorithms draw on historical data to forecast project timelines, detect supply chain risks, and anticipate cost escalations.

On active jobsites, autonomous machinery can now perform excavation, bricklaying, and inspections with AI-assisted precision. Computer vision systems monitor safety compliance, identifying PPE violations and flagging hazards in real time. After project completion, AI-driven digital twins can continue managing and optimizing building performance.

These tools are no longer passive assistants; increasingly, they are decision-makers, and that shift brings profound legal and contractual implications.

Where Traditional Construction Contracts Fall Short

One of the most glaring gaps in standard construction contracts is the lack of direction when AI-driven tools malfunction or produce incorrect outputs. Consider a scenario where a generative design system proposes a structurally unsound layout that a designer follows in good faith. If the result is a failure, who bears responsibility — the designer, the contractor, or the software provider? Without express contract language, the outcome may hinge on unpredictable litigation.

Standard form agreements, including widely used documents from the AIA and ConsensusDocs, were not drafted with AI in mind. As a result, they fail to account for crucial questions such as who’s responsible for selecting and configuring AI tools, who owns the data and digital content these systems generate, who assumes liability for actions or decisions made by autonomous systems, and whether AI malfunctions, cyberattacks, or system outages are treated as force majeure events.

The absence of contractual guidance leaves participants vulnerable to risk misalignment, unclear legal exposure, and potential insurance conflicts.

Legal Risk and Red Flags: How AI May Complicate Construction Liability

The legal implications of AI use in construction are becoming harder to ignore. As artificial intelligence tools take on more decision-making roles across project lifecycles, industry professionals and legal observers alike are beginning to consider how failures or misapplications of these technologies might give rise to disputes.

A range of hypothetical (but increasingly plausible) scenarios has surfaced within the industry. For instance, a machine-learning project management platform could produce inaccurate schedule forecasts, disrupting critical-path planning and potentially exposing contractors to liquidated damages. A BIM-integrated clash detection tool might overlook a major conflict between mechanical and electrical systems, necessitating costly rework. Safety monitoring systems powered by AI may fail to detect site hazards, resulting in injuries and liability exposure.

Additionally, questions surrounding the ownership of AI-generated digital assets — particularly when multiple parties contribute to their creation — could spark disputes over usage rights and intellectual property.

Beyond these scenarios, there are broader structural risks construction teams should keep in focus. These include unverified reliance on AI outputs without human oversight, failure to disclose the use of AI in critical decision-making, dependence on third-party platforms that offer limited warranties or indemnification, ambiguous ownership of digital content produced by AI systems, and inadequate cybersecurity protocols for cloud-based tools handling sensitive data.

These issues reflect a growing zone of legal and contractual ambiguity. The tools may be virtual, but the exposure they create is very real. And with traditional contracts offering limited guidance — many drafted before AI’s entrance into the construction lexicon — the need for updated legal frameworks is becoming increasingly urgent.

The Case for AI-Specific Contract Clauses

Given the rapidly evolving technological landscape, construction contracts must evolve as well. AI-specific clauses can offer a much-needed safeguard against the legal uncertainties posed by algorithm-driven tools.

An effective AI clause should include several core components. Clear definitions of terms such as “artificial intelligence,” “autonomous systems,” and “machine learning tools” ensure that all parties share the same understanding. Disclosure requirements should compel parties to disclose their use of AI tools and to give notice of any material changes during the course of work. Verification standards should require licensed professionals to review and approve all AI-generated outputs before use or implementation.

Liability and indemnity provisions should assign responsibility to the party selecting or configuring the AI and require indemnification for harm caused by improper use or malfunction. Intellectual property and data rights provisions must specify ownership and permitted use of AI-generated content, particularly as it relates to reuse, modification, or post-project application. Force majeure language should address whether AI-related outages or cyber events qualify as excusable delays. Finally, audit rights should permit review of AI performance data and decision logic in the event of a dispute.

Rewriting the Rules for a Machine-Enhanced Industry

AI is now a fixture on jobsites. Whether optimizing designs, predicting risk, or managing safety, these tools are embedded in the project lifecycle. Yet construction contracts — long the industry’s instrument for assigning responsibility and managing risk — often fail to acknowledge this reality.

Contractors, subcontractors, developers, and design professionals all stand to benefit from proactive legal language that clearly defines how AI is used, who is responsible for it, and what happens when it fails.

In a world where critical project decisions may be made by code rather than people, failing to address AI in contractual terms is no longer an oversight — it’s a liability.

The most consequential contributor to a project may not appear in the organizational chart or the contract’s list of parties. But if it’s shaping designs, scheduling work, or managing site safety, its presence must be addressed. Construction contracts are overdue for a software upgrade, and the AI clause may be the patch that keeps risk — and responsibility — in balance.